This article talks about the famous Transformer architecture in Natural Language Processing.

Attention is all you need - The Transformer

I am Raviteja Ganta, a data scientist. This is the place where I write articles in the area of deep learning.


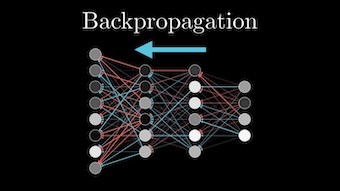

Backpropagation is the workhorse behind training neural networks, and understanding what happens inside this algorithm is of utmost importance for efficient learning. This post gives an in-depth explanation of backpropagation for training neural networks.

It’s obvious that any deep learning model needs some sort of numeric input to work with. In computer vision this is not a problem, since we have pixel values as inputs, but in natural language processing we have text...

This article talks about the Reformer architecture in NLP, which addresses the memory efficiency challenges the Transformer architecture faces on long sequences.

How can we compress and transfer knowledge from a bigger model or an ensemble of models (trained on very large datasets to extract structure from data) to a single small model without much dip in performance?

This article talks about how we can use the pretrained language model BERT to do transfer learning on one of the most famous tasks in NLP - sentiment analysis.